Not everyone knows that search engines such as Google and Bing send crawlers to every site to analyze its HTML code, JavaScript, performance, structure, and more.

Wonder why? A fully optimized website needs more than just keyword density, good content, or even a professional SEO service for successful SEO results.

Gone are the days when ‘keyword stuffing’ alone was your way up the SERPs. Google’s algorithm is widely reported to weigh over 200 ranking factors, and it is updated frequently.

So, it makes sense that for your website to perform better in search engine rankings, you must ensure that your strategy is in-depth and without flaws.

In this regard, a “Technical SEO and Audit” can help!

However, technical SEO auditing is complex and difficult for online marketers and business owners without expertise. But, a good way to figure out what you need is to create a technical SEO audit checklist that determines the health of your website.

So, to help you work through some of the most important elements of modern search optimization, we’ve created this complete technical SEO audit guide.

A checklist like this will help online businesses and eCommerce sites determine where they may be lacking. So, let’s walk through our audit guide below to make sure your technical SEO is complete—

Note: While the number of steps in your technical SEO audit checklist will depend on your goals and the type of site you are going to examine, we have aimed to make this checklist universal, so it includes all the important steps of a technical SEO audit.

What is a Technical SEO Audit?

Despite ‘Technical SEO’ being a topic that only a few of us use rigorously, it is a part of everyone’s life.

Technical SEO audit is a process that examines various technical parts of a website and ensures that they are following best practices for search optimization.

In simple words, it means the technical parts of your site that are directly related to the ranking factors of search engines like Google or Bing.

Most “Technical SEO” elements include HTML, meta tags, website code, JavaScript, and website extensions. [All these elements are important to make your website functional, easy to understand, efficient, user friendly, and visible in SERP.]

For a technical SEO checklist, it is not mandatory to check every part of an SEO campaign. There are several factors for SEO that you should examine separately (including content for on-page SEO best practices, keyword strategy, content, and more).

But technical SEO is considered important because it means checking under the hood of your website for more complex issues that you may not be aware of! Not only that, it also means checking for technical issues that may harm the user experience due to your website’s performance.

Preparatory Steps for Site Audit

Before jumping into the SEO audit process, there are a few preparatory steps you’ll need to go through!

Step 1: Get Access to SEO Audit Tools like Site Analytics and Webmaster Tools

To perform a technical audit of your site, you will need to use a few tools to make the whole process run as smoothly as possible. It’s great if you already have them configured on your website.

Note: While these tools are not necessarily needed to overhaul your site from an SEO audit standpoint – they will make the process far easier and more effective because, with them, you already have a large piece of data needed for a basic site check.

The SEO audit tools mentioned below are (for the most part) free, which is why each of them is worth a closer look.

So, without wasting any time, here are some of the best SEO audit tools and what they can be used for:

| Tool | Usage |
| --- | --- |
| Google PageSpeed Insights | With this tool, find out how quickly your page loads and get recommendations on how to speed it up. |
| Google’s Structured Data Testing Tool | This tool helps you see if your structured data is implemented correctly with either Rich Results Test or Schema Markup Validator. |
| Google Analytics | Using this tool, find user data and trends to optimize your website. |
| Google Search Console | With this tool, see how well your pages are performing on the Google search engine. |
| SERP Simulator | This tool helps you see how your article title tag and meta description will appear on the Google search engine results page. |
| Copyscape | Use this tool to search for copies of your work on the Internet to catch plagiarism. |
| Screaming Frog | With this tool, crawl your website to find searchability errors that might hurt your SEO. (Up to 500 pages free) |
| SpyFu | Use this tool for keyword research and keyword gap reports. (Free version is available) |

Step 2: Check the Domain Safety.

If you are auditing an existing website that has dropped from ranking, first rule out the possibility that the domain is subject to any search engine restrictions.

To do this, consider consulting Google Search Console, and if your site has been penalized for black-hat link building or has been hacked, you’ll see a corresponding notice in the Security and Manual Actions tab of the console.

In such a scenario, be sure to address the warning you see in this tab before proceeding with a technical audit of your site. If you need help, search Google for how to deal with manual and algorithmic penalties.

Conversely, if you’re auditing a new site that’s about to launch, ensure your domain isn’t compromised. Here too, you can search Google for how to choose expired domains and how to avoid getting trapped in the Google sandbox during a website launch.

These are the two essential steps that you must complete first!

Now that you have completed the preliminary steps for a site audit let’s not waste time and proceed to the Technical SEO checklist to audit your website and increase your organic traffic, step by step.

Getting Started With Technical SEO Audit

Warning: It is worth noting that playing with PHP, servers, databases, compression, minification, etc can ruin your website if you don’t know what you are doing. So, ensure you have a proper backup of files and database before you start playing with these options.

Website Loading Speed Time

On the web, loading speed plays an important role in attracting and keeping traffic. Testing has suggested that about 50% of users abandon a website if it takes longer than 3 seconds to load.

Note: Where slow loading speeds mean that a website may lose a lot of visitors, faster loading speeds mean higher conversions and lower bounce rates.

So, to make your site efficient and easy to access for your users, we have some essential speed optimization suggestions—

With Google’s Speed Test, you can easily and concisely analyze your website’s loading speed time.

The tool has improved over the years, and now it shows useful charts for larger websites to help you understand how each website is performing.

One example is Page Load Distributions, which uses two user-focused performance metrics: First Contentful Paint (FCP) and DOMContentLoaded (DCL).

First Contentful Paint marks the moment the browser renders the first bit of content on the screen. DOMContentLoaded, on the other hand, marks the moment when the DOM is ready and no stylesheet is blocking JavaScript execution.

Together, these two metrics show what percentage of page loads are fast and what needs improvement on pages with average and slow speeds.

Another example is the speed and optimization indicators, which show where each website stands based on its FCP and DCL scores. These two metrics use data from the Chrome User Experience Report and indicate how a page’s average FCP and DCL rank.

Improve Server Response Time

You might consider improving your server response time, which is the time it takes for your server to start returning the HTML needed to begin rendering the page. In simple words, when you access a page, the browser sends a request to the server, and the time the server takes to answer is the server response time.

While there are many reasons why a website may have a slow response time, Google names just a few of them.

However, no matter the cause, Google says you should keep the server response time under 200ms.

So, to follow this guidance from Google, work through these 3 steps to test and improve server response time:

- First, collect the data and work out why the server response time is high.

- Then, identify and fix the top performance bottlenecks.

- Lastly, monitor for any future regressions.
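To make the three steps above a little more concrete, here is a minimal Python sketch. It is only an illustration: the function names, the sample numbers, and the choice of median and 95th percentile are our own assumptions, and only the 200ms budget comes from the guidance mentioned above. The timing helper measures the time until response headers arrive, which also includes DNS, connection, and TLS time, so treat it as a rough upper bound.

```python
import math
import statistics
import time
import urllib.request


def measure_response_ms(url, timeout=10.0):
    """Rough time in ms until response headers arrive (includes DNS, connect, TLS)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def summarize_response_times(samples_ms, budget_ms=200.0):
    """Summarize collected response times (ms) against a budget."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    median = statistics.median(ordered)
    # Nearest-rank 95th percentile: the smallest sample with at least
    # 95% of all samples at or below it.
    rank = max(1, math.ceil(0.95 * len(ordered)))
    p95 = ordered[rank - 1]
    return {
        "median_ms": median,
        "p95_ms": p95,
        "within_budget": median <= budget_ms,
        "slow_samples": [s for s in ordered if s > budget_ms],
    }


# Hypothetical samples, e.g. gathered by calling measure_response_ms() on a schedule.
report = summarize_response_times([120, 450, 150, 190, 180])
print(report)
```

Running the summary on a schedule and comparing the reports over time is one simple way to spot a regression.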

However, it is worth adding here that the reason for the slow loading of a website is often the server itself. Therefore, choosing a high-quality server from the beginning is very important. Moving a site from server to server may seem easy in theory.

But in reality, it can cause a range of problems, such as file size limits, a wrong PHP version, and so on.

Moreover, let’s admit that sometimes it can be difficult to choose the right server even because of the price.

So, we’ve made it easier for you with this rule of thumb: if you are a multinational corporation, you probably need dedicated servers, which are expensive, and if you are just starting with a blog, shared hosting services (usually cheaper) will probably suffice. However, don’t simply go after the cheapest or the most famous provider. Explore a bit before you decide, because a host can be strong in one product and weaker in another. Hostgator, for example, has excellent shared hosting services for the US but a less excellent VPS offering.

Optimize & Reduce Image Size Without Affecting the Visual Appearance

When a website loads slowly, the first suspect is usually the images and all the data they carry.

Maybe you’re using large images (not in terms of size on screen but size on disk). Or maybe the images add so many bytes to a page that it takes longer than usual to download everything.

So, what you can do is optimize the images by removing extra bytes and irrelevant data. The fewer bytes the browser has to download, the faster it can download and render the content on the screen.

There are a lot of solutions for compressing images, so here are some tips and recommendations to help you optimize yours:

- Use PageSpeed Insights.

- Compress images automatically in bulk with dedicated tools (tinypng.com, compressor.io, optimizilla.com) and plugins (WP Smush, CW Image Optimizer, SEO Friendly Images) and so on.

- Use GIF and PNG formats because they are lossless. Of the two, PNG is usually preferred because it achieves a better compression ratio with better visual quality.

- Convert GIF to PNG if the image is not an animation.

- Remove transparency if all the pixels are opaque for GIF and PNG.

- Reduce quality to 85% for JPEG formats because that way you reduce the file size without visibly affecting the quality.

- Use the progressive JPEG format for images over 10 KB.

- Prefer vector formats because they are resolution and scale-independent.

- Remove unnecessary image metadata, such as camera information and settings.

- Use the option to “Save for Web” from dedicated editing programs.

Note: While Google PageSpeed Insights recommended using newer image formats such as JPEG 2000 or WebP in 2019, not all browsers and devices display these formats well. GIF, PNG, and JPEG remain the most commonly used image formats, so regular image compression is still recommended.

Minimize the CSS and Render-Blocking JavaScript

When you run a speed test with Google’s PageSpeed Insights, you’ll see this message: “Eliminate render-blocking JavaScript and CSS in above-the-fold content in case you have some blocked resources that cause a delay in rendering your page.”

In addition to pointing out the resources involved, the tool also provides some great technical SEO tips on ‘Removing render-blocking JavaScript’ and ‘Optimizing CSS delivery.’

You can remove render-blocking JavaScript by following Google’s guidelines, and avoid or minimize the use of blocking JavaScript via these three methods: inline the JavaScript, make the JavaScript asynchronous, or defer the loading of the JavaScript.
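If you want a quick way to spot candidates before opening PageSpeed Insights, the following Python sketch scans a page's HTML for external scripts in the head that carry neither `async` nor `defer`. The class and function names and the sample HTML are hypothetical, and the check is a simplification: it only looks at the head and does not measure whether a script actually delays rendering.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external <script> tags in <head> that lack async/defer."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            src = attr_map.get("src")
            # Module scripts are deferred by default, so they are skipped.
            is_module = attr_map.get("type") == "module"
            has_hint = "async" in attr_map or "defer" in attr_map
            if src and not has_hint and not is_module:
                self.blocking.append(src)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html):
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


SAMPLE_HTML = """
<html><head>
<script src="/js/analytics.js"></script>
<script src="/js/app.js" defer></script>
<script src="/js/ads.js" async></script>
<script>var inline = 1;</script>
</head><body><script src="/js/footer.js"></script></body></html>
"""

print(find_blocking_scripts(SAMPLE_HTML))
```

In this sample, only the analytics script would be flagged, since the others are deferred, asynchronous, inline, or placed at the end of the body.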

Likewise, if Google detects that a page delays the time to first render because it contains ‘blocking external stylesheets,’ you should optimize CSS delivery. For this, you have two options:

- For small external CSS resources, inline the CSS directly into the HTML so the browser can render the page without an extra request.

- For large CSS files, use Prioritize Visible Content: reduce the size of the above-the-fold content, inline the CSS needed to render it, and defer loading the rest of the styles.

Furthermore, it is to be mentioned that PageSpeed shows which files need to be optimized through the minifying technique.

This tool will point to a list of HTML resources, CSS resources, and JavaScript resources based on the situation. For each kind of resource, you have the following individual options—

- HTMLMinifier to minify HTML.

- CSSNano and csso to minify CSS.

- UglifyJS to minify JavaScript.

As explained by Ilya Grigorik, a web performance engineer at Google, these are the 3 steps to follow when reducing the size of your resources:

- Optimize the Resources: Based on the type of information you want to provide on your site, make an inventory of your files so that you keep only what is relevant. Once you have decided what is relevant, you’ll be able to see what kind of content-specific optimizations you need.

- Compress the Data: Once you have eliminated the unnecessary resources, compress the resources the browser still needs to download. This reduces the size of the data and helps the website load the content faster.

- Use Gzip Compression: Gzip works best on text-based data, so you can compress web pages and style sheets before sending them to the browser. This works wonders for CSS files and HTML because these types of resources contain a lot of repeated text and white space. Gzip shrinks the overall file size by replacing repeated strings within a text file with short references.
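To see why Gzip works so well on text-based resources, here is a small Python sketch using the standard `gzip` module. The stylesheet is made up and deliberately repetitive, so the saving is larger than you would see on a real file; `gzip_savings` is just an illustrative helper name.

```python
import gzip


def gzip_savings(text):
    """Return (raw_bytes, gzipped_bytes) for a piece of text."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return len(raw), len(compressed)


# A made-up stylesheet with a lot of repeated rules and white space.
css = ".card { margin: 0 auto; padding: 16px; color: #333333; }\n" * 200

raw_size, gz_size = gzip_savings(css)
print(f"raw: {raw_size} bytes, gzipped: {gz_size} bytes")
```

In practice the web server or a caching plugin applies this compression for you; the sketch only shows the effect on file size.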

Reduce the Number of Resources & HTTP Requests

Next, you can consider reducing the number of resources to increase website speed.

When a user enters your website, a call is made to the server for each requested file, and the larger those files are, the longer the response takes. On top of that, many requests in quick succession will slow down the server.

To avoid this, the best you can do is reduce the overall download size by removing unnecessary resources (such as sliders) and then compressing the remaining resources.

Another thing you can do is combine the CSS and JS files so that a single request can be made instead of many. To do this, you can use plugins like Autoptimize and W3 Total Cache.

Using the combine option, the plugin merges all the CSS and JS files into a single file. In this way, the browser only has to make one request to the server for all those files, instead of one request for each file.
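Conceptually, the combine step is little more than careful concatenation. The sketch below is not how any particular plugin works internally; it is a simplified Python illustration with a hypothetical `combine_assets` helper. It keeps the original load order, labels each part with a comment, and puts a semicolon between JavaScript files so that one file's last statement cannot run into the next file's first.

```python
def combine_assets(sources, kind):
    """Concatenate (name, text) pairs in load order; kind is "css" or "js"."""
    if kind not in ("css", "js"):
        raise ValueError("kind must be 'css' or 'js'")
    # A lone semicolon between scripts guards against files that do not
    # end with one; CSS rules only need a newline between them.
    separator = "\n;\n" if kind == "js" else "\n"
    parts = [f"/* {name} */\n{text.strip()}" for name, text in sources]
    return separator.join(parts) + "\n"


combined_css = combine_assets(
    [("reset.css", "body{margin:0}"), ("site.css", "h1{color:red}")],
    "css",
)
print(combined_css)
```

Because order matters for both CSS and JavaScript, a wrong order is the most common way this option breaks a site.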

Note: Since this option can break the entire site or make it messy, ensure that you have a proper backup of files and databases before making any changes.

Consider Having a Browser Cache Policy

It is worth mentioning that the browser cache automatically saves resources in the user’s computer when they visit a new website for the first time, and those resources will help users get the desired information at a faster speed when they enter the same site for the second time.

Therefore, one of the best ways to significantly improve page load speed is to take advantage of the browser cache and set it up as per your needs. For this, you can use W3 Total Cache or any other caching plugin that suits you best.

Note: Using a cache will also make it harder to see changes. So, if you make any changes to your website, open an Incognito window to see those changes, and then come back to the plugin settings to clear the cache (whenever needed).
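Under the hood, a browser cache policy comes down to the `Cache-Control` header your server sends with each file. The Python sketch below shows one possible policy, under our own assumptions: static assets are cached for a year (which is only safe if their file names or query strings change whenever the content changes), while everything else is revalidated on each visit. The extension list and the function name are illustrative, not a recommendation from any specific plugin.

```python
import posixpath

# Assumed set of static asset types that rarely change once published.
LONG_LIVED = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2"}


def cache_control_for(path, one_year=31536000):
    """Pick a Cache-Control header value based on the file extension."""
    # Ignore any query string such as ?v=3 before reading the extension.
    ext = posixpath.splitext(path.split("?", 1)[0])[1].lower()
    if ext in LONG_LIVED:
        return f"public, max-age={one_year}"
    # HTML and other documents: the browser may store them but must
    # check with the server before reusing them.
    return "no-cache"


for sample in ["/assets/site.css", "/img/Hero.JPG?v=3", "/blog/post.html", "/"]:
    print(sample, "->", cache_control_for(sample))
```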

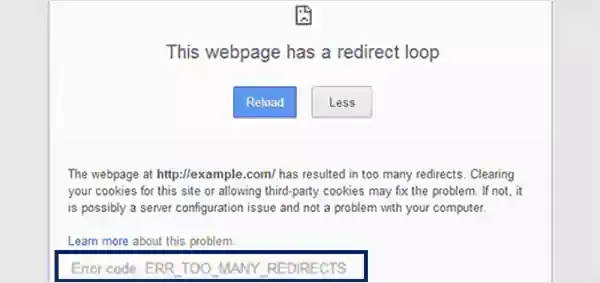

Limit the Number of Redirects and Remove the Redirect Loop

While redirects can save you a lot of trouble with regards to link equity/juice and broken pages, they will slow down your websites if they are in large numbers.

To be precise, the higher the number of redirects, the longer it takes for the user to land on the landing page.

So, make sure you only have one redirect for a page. Otherwise, you risk a long redirect chain or even a redirect loop (a series of redirects that leads back to a page already visited), in which case the browser can never reach a final page and ends up showing an error.

However, even in that scenario, there are many ways to customize the 404-error page and give some guidelines to users so that you don’t lose them. Basically, you can design a friendly page to redirect your users back to your homepage or any other related or relevant content.

In the meantime, you can use Google Search Console or the site explorer in any of the newer technical SEO site audit tools to crawl and analyze all redirects on your site.
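Once you have exported the redirects from such a tool, classifying them is straightforward. The Python sketch below assumes the redirects are available as a simple source-to-target mapping (the paths are made up) and labels each starting URL as fine, part of a chain, or stuck in a loop. `trace_redirects` is a hypothetical helper, not part of any audit tool.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow a {source: target} redirect map from `start`.

    Returns (path, status), where status is "ok" (0 or 1 hop),
    "chain" (2 or more hops), or "loop" (a URL repeats).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) - 1 < max_hops:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
    hops = len(path) - 1
    return path, ("ok" if hops <= 1 else "chain")


# Hypothetical redirect export.
REDIRECTS = {
    "/old": "/new",
    "/a": "/b",
    "/b": "/c",
    "/x": "/y",
    "/y": "/x",
}

for url in ["/old", "/a", "/x"]:
    print(url, trace_redirects(url, REDIRECTS))
```

Anything reported as a chain can usually be fixed by pointing the first URL straight at the final destination.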

Don’t Let Your Site Load With Too Much Content

Needless to mention, over time, sites get filled with useless images, plugins, and functions that are of no use.

For example, ‘Sliders.’ They routinely load your site with a lot of things you don’t need, such as a JavaScript file. And, if you have 5 or more slides on your homepage with an equal number of big-enough images, then your site may be 2 or 3 times slower because of the size of the bytes of the images.

A good solution for this is a development environment where you can test 5-10 plugins until you find exactly what you need. Then, create an implementation plan so that you know the essentials you need to install on the live version.

After that, you can reset the development version by deleting it and copying the updated live version over it. This way, the live version will not be clogged, and the development version will closely resemble the live one.

“This is how you can make your website faster and reduce loading times by following the recommendations of Google PageSpeed Insights and the Google Developer Guidelines.”

Website Visibility, Functionality, User-Friendliness & Usability

Now that you are sure that your website can load faster for your users, let’s move on to see what you can do to improve your website’s visibility in search engines.

Make Sure Your Content is Visible

While content may not be a ‘technical’ SEO ranking factor, it is definitely a ranking factor and one of the good steps for your technical SEO site audit.

So, it’s always a good idea to make sure your website content is visible to search engines. In fact, you can check your site’s homepage, category pages, and product pages to ensure that your content is visible not only to humans but also to Googlebot.

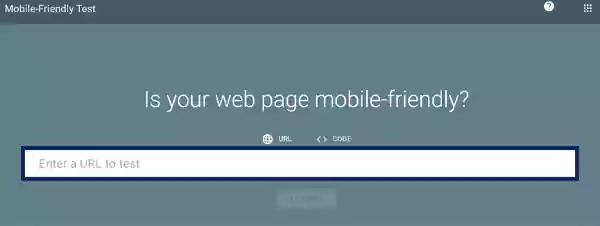

Make Sure Your Website Is Mobile Friendly

Google has prioritized mobile indexation as more than 50% of users worldwide are using their mobile devices to browse the Internet. Therefore, you should also make sure that your website is optimized (in terms of design, speed, and functionality) for mobile devices.

Now, with that mentioned, we suggest you make sure that your site is mobile-friendly by testing it on Google’s Mobile Friendly Test Page.

Generally, a responsive design is preferred over a completely separate mobile version. In addition, Google has promoted AMP (Accelerated Mobile Pages) as a way to improve the mobile UX (user experience), which the company values highly.

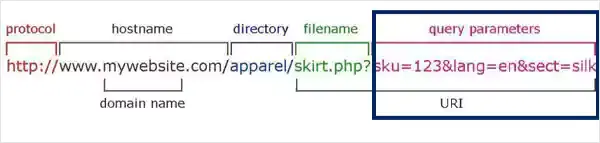

Create Search Engine-Friendly URLs

For a website, URLs are very important, and changing them is not a good idea because if you change the page URL frequently, it will create problems in the search engines.

Therefore, you have to get them right the first time and note that it is useful for both users and search engines to have URLs that are descriptive and keyword-rich. But generally speaking, many people often forget this and create websites with dynamic URLs that are not optimized at all.

While Google accepts them too and they can rank, eventually you will reach the point where you have to migrate to new ones to improve performance, UX, and search engine visibility, and that will be a struggle!

So, to make a long story short, it is recommended to create easy-to-follow URLs right from the beginning.

To do so, you can keep in mind these 5 tips—

- Instead of underscores (_), use dashes (-).

- Make a short URL.

- Use the keyword (focus keyword).

- Think about your users and focus on user experience (basically look at every aspect of the link from the user’s perspective).

- Avoid having query parameters in the URL.
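Several of these tips can be checked or applied automatically. The Python sketch below is one possible approach, not a standard: `make_slug` turns a page title into a short, dash-separated slug, and `url_issues` flags underscores, query parameters, and overly long URLs. The six-word limit and the 75-character limit are arbitrary assumptions you should adjust to your own site.

```python
import re
import unicodedata
from urllib.parse import urlsplit


def make_slug(title, max_words=6):
    """Build a lowercase, dash-separated slug from a page title."""
    # Strip accents (e.g. "é" becomes "e"), then lowercase.
    text = unicodedata.normalize("NFKD", title)
    text = text.encode("ascii", "ignore").decode("ascii").lower()
    # Underscores, spaces and punctuation all collapse into single dashes.
    text = re.sub(r"[^a-z0-9]+", "-", text).strip("-")
    return "-".join(text.split("-")[:max_words])


def url_issues(url, max_length=75):
    """List the URL tips that a given URL does not follow."""
    parts = urlsplit(url)
    issues = []
    if "_" in parts.path:
        issues.append("underscore in path")
    if parts.query:
        issues.append("query parameters")
    if len(url) > max_length:
        issues.append("long URL")
    return issues


print(make_slug("Technical SEO_Audit Checklist!"))
print(url_issues("https://www.site.com/post_list.php?id=42"))
```

The focus keyword and the user's perspective still need a human eye; the script only handles the mechanical part.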

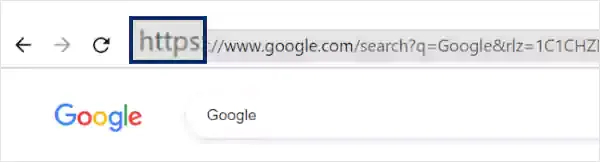

Use HTTPS – A Secure Protocol

In 2014, Google announced that HTTPS was a new ranking signal and recommended that everyone move their sites from HTTP to HTTPS (Hypertext Transfer Protocol Secure).

This is because HTTPS encrypts data and does not allow it to be modified or corrupted during transfer, protecting it from man-in-the-middle attacks.

It also offers other benefits:

- It helps in increasing the ranking of your website, as it is one of the ranking factors of Google.

- It assures users that the website is safe to use and the data provided is encrypted to avoid hacking or data leaks.

- It preserves referrer details in Google Analytics, which would otherwise show up under ‘Direct’ traffic sources.

That being said, if you change your website URL to use HTTPS, a lock will appear in front of the URL in the address bar.

However, if your website URL does not use the HTTPS protocol, you will see a notification icon and when you click on it, you will get an alert that the connection is not secure, so the website is also not secure.

Note: Although it’s best to move from HTTP to HTTPS, it’s equally important to find the best way beforehand to recover all of your data after you’ve moved your website.

Set All Versions to Point to the Correct Preferred Version

Another good thing to mention here is that you should make sure that all other versions of your website are pointing to the correct, preferred version.

For example: If your preferred version is https://www.site.com, then all other versions (such as http://site.com and https://site.com) should 301 redirect directly to that version.
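The rule in this example can be expressed as a small Python function. This is only a sketch of the decision logic, assuming it is called for variants of your own domain; in practice the redirect is configured on the web server, and `preferred_redirect` is a hypothetical name.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_redirect(url, scheme="https", host="www.site.com"):
    """Return (301, target) if `url` is not on the preferred version, else None."""
    parts = urlsplit(url)
    if parts.scheme == scheme and parts.netloc.lower() == host:
        return None  # already the preferred version, no redirect needed
    # Keep path, query and fragment so the visitor lands on the same page
    # in a single hop instead of being sent to the homepage.
    target = urlunsplit((scheme, host, parts.path or "/", parts.query, parts.fragment))
    return 301, target


for variant in ["http://site.com/pricing?plan=pro", "https://site.com", "https://www.site.com/about"]:
    print(variant, "->", preferred_redirect(variant))
```

Redirecting straight to the final URL, rather than first to HTTPS and then to the www host, avoids creating a redirect chain.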

Note: You can also check if this is alright by using the SEO Audit Tool.

Make Sure You Have Crawlable Resources

For those who don’t know, crawling is the step that comes right before indexing, which is what eventually puts your content in front of the user. To be more specific, Googlebot finds your site, crawls your data, and sends it to the indexer, which renders the page. After that (if you are in luck), you will see that page ranking in the SERP.

However, non-crawlable resources are a significant technical SEO issue. So, make sure your resources are crawlable.

Note: Don’t block Googlebot from crawling your JavaScript or CSS files because only then will Google be able to render and interpret your web pages like modern browsers do.

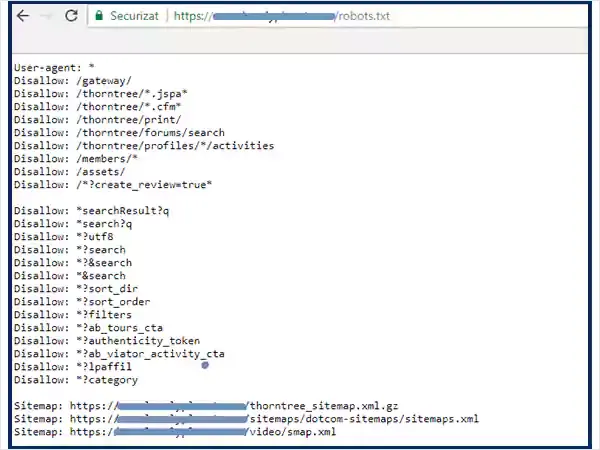

Show Google the Right content by Testing Your Robots.Txt File

If your website experiences crawling issues, be aware that they are often related to the robots.txt file. This file tells Googlebot which pages to crawl and which ones not to crawl, so testing it lets you confirm that Googlebot has access to the right content.

To view your robots.txt file online, all you need to do is open http://domainname.com/robots.txt in your browser. There, make sure the order of your directives is correct.

Note: If the directives are not in the correct order, you can use the robots.txt Tester tool from Search Console to write or edit the robots.txt file for your site.

The robots.txt checker tool is quite easy to use and it also shows you whether your robots.txt file blocks Googlebot web crawlers from specific URLs.
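You can run a similar check offline with Python's built-in `urllib.robotparser`. The robots.txt content and URLs below are made up for illustration. One caveat: Python's parser applies the first rule that matches, while Google documents that it uses the most specific matching rule, so the two can disagree on files that mix overlapping Allow and Disallow lines. Treat this as a rough first check, not a replacement for Google's own tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block private areas, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt blocks for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


URLS = [
    "https://www.example.com/",
    "https://www.example.com/admin/login",
    "https://www.example.com/assets/site.css",
    "https://www.example.com/cart/",
]

print(blocked_urls(ROBOTS_TXT, URLS))
```

Including a CSS or JavaScript URL in the list, as above, is a quick way to confirm you are not blocking the files Google needs to render your pages.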

When Googlebot is unable to crawl specific URLs, there can be many reasons for this, but Google names only a few of them:

- The firewall or DoS protection system is configured incorrectly.

- Googlebot has been intentionally blocked from accessing the website.

- There are DNS problems and Google cannot communicate with the DNS server.

After you’ve checked for issues and figured out which blocked resources are displayed in the tester tool, you can test again and see if your website is now running free of issues and errors.

Verify the Number of Indexed Pages

Many experts in content marketing and SEO including James Parsons explain the critical importance of the indexing phase for a website. “Indexed web pages are an important part of a website’s Internet search engine ranking and web page content value,” he said.

Indexed pages are those that Google has crawled, processed, and stored in its index, so they are eligible to appear in search results.

The Search Console tool can tell you a lot about the status of your indexed pages. Note that the ideal situation would be that the number of pages indexed matches the total number of pages on your website that you want to appear in search.

Note: You can also use the Site Audit Tool to view URLs that are marked with a no-index tag.

Add Breadcrumbs for Better Navigation

Another suggestion for a site audit is to implement breadcrumbs on your website to lead the user through it. Breadcrumbs help visitors understand where they are on the website and give them an easier way to navigate.

To be more precise, breadcrumbs can improve user experience and help search engines have a clear picture of site structure.

Not only that, but breadcrumbs can also reduce the number of actions and clicks (that a user must take) on a page. So, this way, instead of going back and forth, users can just use the link, level, or category to navigate where they want.

Note: Breadcrumbs is a technique that can be applied to large websites or e-commerce sites via the Breadcrumb NavXT plugin or Yoast SEO, etc.

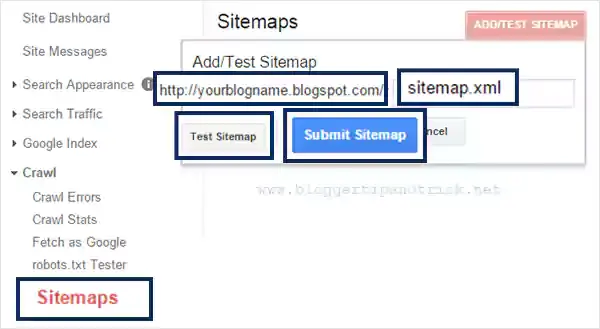

Review Your XML Sitemap to Ensure Not Being Outdated

Whether you know it or not, an XML sitemap tells Google how your website is organized. Crawlers use it to read and understand the website’s structure more easily. A good structure means better crawling, and vice versa.

With this in mind, you can use the Search Console tool to review your sitemap. When you open the tool, you will find the Sitemap report in the Crawl section. Now, there you can add, manage and test your sitemap file.

Regarding it, you have two options and that is, either you can test a new sitemap or you can test the previously added sitemap.

In the former case, execute the following steps—

- Enter the URL of the Sitemap in the Add/Test Sitemap text field.

- Select the Test Sitemap option and refresh the page (if required).

- Click on the Open Test Results on completion of the test to check errors.

- If there are any, fix your errors.

- And, after you fix your errors, click on the Submit Sitemap.

In the latter case, since you can test an already submitted sitemap, click on Test and check the result. After checking the result, you have to do three things—

- Update the sitemap on your site occasionally or when new content is added.

- Periodically clean it, and remove old and outdated content.

- Break the sitemap into smaller parts to keep it short so that your important pages are crawled more often.

Note: A sitemap file cannot contain more than 50,000 URLs, and it must not be larger than 50MB (uncompressed).
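If your site has more URLs than a single file allows, the list has to be split. The Python sketch below shows one way to do that with the standard library: it chunks a URL list at the 50,000 limit and builds a minimal sitemap document for each chunk. The URLs are placeholders, and the output contains only the required `loc` element, so treat it as a starting point.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000


def split_urls(urls, limit=MAX_URLS):
    """Split a URL list into chunks that each fit in one sitemap file."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]


def build_sitemap(urls):
    """Return a minimal sitemap document (as bytes) for the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder URLs for a large, hypothetical site.
all_urls = [f"https://www.example.com/p/{i}" for i in range(120_000)]
chunks = split_urls(all_urls)
print([len(chunk) for chunk in chunks])
print(build_sitemap(chunks[0][:2]).decode("utf-8"))
```

Each generated file should also be checked against the 50MB size limit before it is submitted.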

Optimize Your Crawl Budget

The importance of optimizing your crawl budget is explained in the article “DeepCrawl the importance of optimizing your crawl budget” by Maria Cieślak, a Search Engine Optimization Expert.

So, instead of repeating that explanation, here are the recommended actions for optimizing the crawl budget:

- Prevent indexing for low-quality and spammy content.

- Check for soft 404s and fix them with the help of a personalized message and a custom page.

- Delete hacked pages.

- Keep your sitemap up to date.

- Fix infinite space issues.

- Get rid of duplicate content to avoid wasting the crawl budget.

Do Not Use Meta Refresh to Move A Website

Since we’ve already talked about redirects, it’s time to understand why Google doesn’t recommend using Meta Refresh to move a site.

First, note that there are three ways to define a website redirect:

- HTTP responses with a status code of 3xx.

- JavaScript redirections using the DOM.

- HTML redirections using the <meta> element.

Aseem Kishore, owner of Help Desk Geek.com, explains why it’s a good idea not to use the Meta Refresh technique and suggests disabling it instead. So, if you want to move a site, consider the following steps recommended in Google’s guidelines:

- Read and understand the basics of moving a website.

- Build a completely new site and test it.

- Create URL mappings from existing URLs to the new ones.

- Correctly configure the server to make the redirects to move the site.

- Monitor traffic for old and new URLs.

Flash Site Must Use Redirect to the HTML Version

If you’re building a Flash site without redirecting to the HTML version, this is considered a big SEO mistake because Flash can be an attractive form of content, but like JavaScript and AJAX, it’s difficult to render.

In simple words, there’s no point in a pretty site when Google can’t read it and show it the same way you’d want it to! Therefore, it is recommended that the Flash site must redirect to the HTML version.

Note: You can create an HTML version with SWFObject 2.0 as this tool helps you customize Flash content.

Use Hreflang for Language and Regional URLs

For those who don’t know, hreflang tags are used for language and regional URLs. It is recommended to use the rel="alternate" hreflang="x" attribute to display the correct language or regional URL in search results in these situations:

- For user-generated content: You keep the main content in a single language and use translation templates (navigation and footer).

- For a website that uses the English language targeted to the US, GB, and Ireland: You have smaller regional variations with similar content in the single language.

- For websites where you have multiple language versions of each page: You have site content that has been fully translated.

On this topic, former Developer Programs Tech Lead Maile Ohye also explains how site owners can expand to new languages and stay search engine friendly. In particular, check that your hreflang setup:

- Doesn’t have missing confirmation links: If page 1 links to page 2, then page 2 must link back to page 1.

- Doesn’t have incorrect language codes: Language codes must be in ISO 639-1 format, optionally followed by a region in ISO 3166-1 alpha-2 format.

Note: Depending on these options, you can apply multiple hreflang tags to a single URL. However, make sure that the hreflang provided is valid.
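Both of the checks above (confirmation links and code format) are easy to automate once you have collected the hreflang annotations for each page. The Python sketch below assumes they are stored as a dictionary of page URL to `{hreflang: alternate URL}`; the URLs are made up and the function names are hypothetical. The format check is a simplification: it only verifies the shape of the code (two letters, optional two-letter region, or `x-default`), not that the code actually exists.

```python
import re

# Shape check only: language, optional region, or the special x-default value.
HREFLANG_RE = re.compile(r"^(x-default|[a-z]{2}(-[a-z]{2})?)$", re.IGNORECASE)


def invalid_codes(annotations):
    """Return hreflang values that do not look like language[-region] codes."""
    codes = {code for alts in annotations.values() for code in alts}
    return sorted(code for code in codes if not HREFLANG_RE.match(code))


def missing_return_links(annotations):
    """Return (page, alternate) pairs where the alternate does not link back."""
    missing = []
    for page, alts in annotations.items():
        for alternate in alts.values():
            if alternate == page:
                continue  # a page referencing itself needs no confirmation
            if page not in annotations.get(alternate, {}).values():
                missing.append((page, alternate))
    return missing


# Hypothetical annotations collected from three pages.
ANNOTATIONS = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
        "en-ie": "https://example.com/ie/",
    },
    "https://example.com/de/": {
        "de": "https://example.com/de/",
        "en": "https://example.com/en/",
    },
    "https://example.com/ie/": {
        "en-ie": "https://example.com/ie/",
    },
}

print(missing_return_links(ANNOTATIONS))
print(invalid_codes(ANNOTATIONS))
```

Here the Irish page is reported because it never links back to the English page. Note that a value such as en-UK would pass this shape check even though the ISO 3166-1 code for the United Kingdom is GB, so a full validation needs the official code lists.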

Test on as Many Platforms and Devices as Possible to Ensure Your Website Works Properly

If you want your users to have a great experience, you need to test your website on multiple devices because different people use different platforms and devices.

So, test it on operating systems such as Windows, iOS, and Linux, in browsers such as Firefox, Safari, Edge, Opera, and Chrome, and on as many devices as you can!

Now, let’s suppose you’re using Chrome. To start testing there, follow these steps:

- Open your site in Chrome, right-click anywhere on the page, and select Inspect.

- Then, toggle on the Device Toolbar.

- After that, choose the type of device on which you want to view your site.

Note: You can also use third-party tools, such as ScreenFly.

“This is how you can go through the functional elements of your website to check and resolve issues related to crawling, mobile-friendliness, navigation and indexing status, using redirects and making the website accessible to Google.”

Optimization of Website Content

Last but not least, now that you know how to fix the general issues related to crawlability and indexability, it is time to pay more attention to the specific issues related to your content, such as broken pages, internal linking, etc.

Note: This is very important if you really want to surpass your competition, especially in highly competitive markets.

Redirect | Replace Broken Images, URLs & Resources

There is a famous saying: a picture is worth a thousand words. But when an image on a webpage is not available, a broken image is displayed in the visitor’s browser.

One solution to this problem is to add an error handler on the IMG tag, like this:

<img src="https://www.example.com/broken_url.jpg" onerror="this.onerror=null; this.src='path_to_default_image'" />

While some webmasters say that Chrome and Firefox recognize images that fail to load and log them to the console, others have a different take on it.

Either way, Sam Deering, a web developer specializing in JavaScript and jQuery, provides these steps to solve such issues:

- First, find some information on the current images on the page.

- Then, use AJAX to test if the image exists.

- After that, refresh the image.

- Next, fix broken images using AJAX.

- Finally, check the non-AJAX version of the function.

The same is true of broken URLs. Nothing strange will appear on the site, but visitors who click a broken link will simply have a bad experience.

To avoid this, you can go to the ‘Architecture section’ in the Site Audit Tool and see what resources are broken on your website.
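If you want to see what such a check involves, here is a minimal Python sketch. It is not the AJAX approach by Sam Deering described above, but a server-side alternative: it collects the image and link URLs from a page’s HTML and reports those that answer with an error status. The class and function names and the example URLs are hypothetical. The status lookup is passed in as a parameter so the example can run offline with stubbed results; `http_status` is one possible real lookup, and some servers do not answer HEAD requests correctly.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class ResourceCollector(HTMLParser):
    """Collect absolute URLs of images and links found in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        attr_name = {"img": "src", "a": "href"}.get(tag)
        if attr_name is None:
            return
        value = dict(attrs).get(attr_name)
        if value:
            self.urls.append(urljoin(self.base_url, value))


def http_status(url):
    """Return the HTTP status for url, or None if the request fails outright."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as response:
            return response.status
    except HTTPError as error:
        return error.code  # e.g. 404 or 500
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def broken_resources(html, base_url, get_status=http_status):
    """Return the URLs in html that fail or answer with a 4xx/5xx status."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    broken = []
    for url in collector.urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken


# Offline demonstration with a stubbed status lookup (hypothetical URLs).
page = '<a href="/about">About</a><img src="/img/logo.png"><img src="/img/old.jpg">'
fake_statuses = {
    "https://www.example.com/about": 200,
    "https://www.example.com/img/logo.png": 200,
    "https://www.example.com/img/old.jpg": 404,
}
print(broken_resources(page, "https://www.example.com/", fake_statuses.get))
```

Each URL reported this way is a candidate for a redirect or a replacement.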

Audit Internal Links to Improve & Rank Higher

Another way to optimize your content is to use internal links, which connect the pages of your own site to one another. They can build a strong website architecture by spreading link juice (or link equity, as others call it).

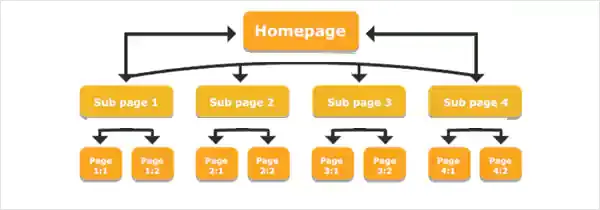

Connecting related pieces of content is known as ‘Silo Content.’ This method defines a hierarchy by grouping topics and content based on keywords.

Referring to the above image, note that—

- Homepage—is the most important page.

- Sub Page—is the next most important.

- Page—is the third and least important.

Now, to be specific, building internal links has many advantages. It:

- Organizes Site Architecture.

- Opens the way for Search Engines by making pages accessible to Spiders.

- Transfers Link Juice.

- Improves User Navigation and provides additional information to the user.

- Organizes pages by Keyword used as Anchor Text.

- Highlights the most important Pages and transfers this information to Search Engines.

Note: Whenever you audit internal links, there are four things you must check thoroughly: broken links, redirected links, click depth, and orphan pages.
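Click depth and orphan pages can both be derived from a map of which page links to which. The following Python sketch assumes a hypothetical `links` dictionary (for example, built from a crawler export) and uses a breadth-first search from the homepage. Here, an orphan is treated as any known page that cannot be reached by following links from the homepage, which is a slightly broader definition than "no inbound links at all."

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from home; returns {page: clicks needed to reach it}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, all_pages, home):
    """Pages that exist on the site but cannot be reached from home via links."""
    reachable = click_depths(links, home)
    return sorted(set(all_pages) - set(reachable))


# Hypothetical site: page -> pages it links to
links = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo-audit"],
    "/blog": ["/blog/technical-seo", "/services"],
    "/blog/technical-seo": ["/services/seo-audit"],
    "/old-landing-page": ["/"],  # links out, but nothing links to it
}
all_pages = list(links) + ["/services/seo-audit"]

depths = click_depths(links, "/")
print(depths)
print(orphan_pages(links, all_pages, "/"))
```

Pages with a high click depth are candidates for extra internal links from pages closer to the homepage.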

Place An Appropriate Number of Links On-Page

The number of links on a single page has a significant impact on UX. Also, people in the web community often associate pages that have 100 or more links with “link farms”.

Needless to say, a piece of content with abundant links will distract users and fail to inform them, because so much of it is linked. So, keeping that in mind, add a link only:

- Where you think it is relevant.

- When it may provide additional information.

- When you need to specify the source.

To learn more about this, you can read the article on Varvy by Patrick Sexton, Googlebot Whisperer, where he explains why it is important to have a fair number of links per page.

Generally speaking, the more links there are on a page, the more organized that page needs to be so that users can find the information they came for.

Note: Be careful to search for natural ways to add links and don’t violate Google’s guidelines for link building. And, remember that the same recommendation applies in the case of internal links also.
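To get a rough idea of how many links a page carries, you can count them from its HTML. The Python sketch below is one way to do that with the standard library. The `LinkCounter` class, the sample markup, and the `LINK_LIMIT` value are illustrative assumptions; the limit simply mirrors the 100-link figure mentioned above and is not an official rule.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCounter(HTMLParser):
    """Count internal and external <a href> links in one HTML document."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.site_host = urlparse(page_url).netloc
        self.internal = 0
        self.external = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href or href.startswith(("#", "mailto:", "tel:", "javascript:")):
            return  # skip anchors that do not lead to another page
        host = urlparse(urljoin(self.page_url, href)).netloc
        if host == self.site_host:
            self.internal += 1
        else:
            self.external += 1


def count_links(html, page_url):
    """Return the number of internal and external links found in html."""
    counter = LinkCounter(page_url)
    counter.feed(html)
    return {"internal": counter.internal, "external": counter.external}


LINK_LIMIT = 100  # threshold taken from the text above; an assumption, not a rule

sample = (
    '<a href="/pricing">Pricing</a>'
    '<a href="https://www.example.com/blog">Blog</a>'
    '<a href="https://developers.google.com/search">Docs</a>'
    '<a href="#top">Back to top</a>'
)
counts = count_links(sample, "https://www.example.com/")
print(counts, "too many links" if sum(counts.values()) >= LINK_LIMIT else "ok")
```

Subdomains are counted as external here because only the exact host name is compared; adjust that comparison if your site spans several subdomains.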

Highlight Your Content With Structured Data

Consider highlighting your content with structured data, because it is one of the most effective ways to ensure that Google understands your content. It also helps users directly reach and choose the page that interests them through rich search results.

In other words, if a website uses structured data markup, Google can display that information as rich results in the SERP.

And, in addition to rich snippets, structured data can be used to—

- Be featured in a knowledge graph.

- Receive beta releases.

- Help Google deliver results from your website based on contextual understanding.

- Benefit from AMP, Google News, and more.

Note: The vocabulary for structured data is schema.org. It helps webmasters mark up their pages in a way that all the major search engines can understand.

In addition, if you want your site’s pages to appear in rich search results, you should use one of these supported formats to highlight content:

- JSON-LD (recommended).

- RDFa.

- Microdata.
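As an illustration of the JSON-LD format, here is a small Python sketch that builds a schema.org `Article` description and wraps it in the script tag that goes into a page’s HTML. The function name and all of the values (headline, author, date, URL) are hypothetical, and the set of properties shown is only a small sample of what schema.org defines.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Build a schema.org Article description wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script tag early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical values, for illustration only
snippet = article_json_ld(
    headline="Technical SEO Audit Checklist",
    author_name="Jane Doe",
    date_published="2022-01-15",
    url="https://www.example.com/technical-seo-audit/",
)
print(snippet)
```

Generating the markup from your page data, rather than typing it by hand, helps keep the structured data consistent with what the visitor actually sees.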

After highlighting your content with structured data, test it using the Google Structured Data Testing Tool (since superseded by Google’s Rich Results Test). Testing will show you whether you’ve set it up correctly and whether you’ve followed Google’s guidelines.

Note: If you don’t follow Google’s guidelines, you could be penalized for spammy structured markup.

Avoid Making Use of Duplicate Content

Lastly, when talking about technical SEO, how can one forget about duplicate content, which is a serious problem?

On this, Jayson DeMers, founder and CEO of AudienceBloom, also said on Forbes that duplicate content can affect your website, discourage search engines from ranking it, and lead to a poor user experience.

So, you really should avoid duplicate content. To do that, review the HTML improvements that Search Console suggests and remove the duplicates.

Also, make sure to keep title tags and meta descriptions unique and relevant across your website by checking ‘Search Appearance > HTML Improvements’ in Search Console.

There, you can find a list of all duplicate content, with links to the pages that need improvement. Review and remove each duplicate element, and write new titles and meta descriptions to replace them.
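If you have a crawl export on hand, you can also spot duplicate titles and meta descriptions yourself. The Python sketch below assumes a hypothetical `pages` dictionary that maps each URL to its title and description; it groups URLs that share the same value after normalising case and whitespace.

```python
from collections import defaultdict


def find_duplicates(pages, field):
    """Group URLs that share the same value for field (e.g. 'title')."""
    groups = defaultdict(list)
    for url, meta in pages.items():
        # Normalise spacing and case so trivial differences do not hide duplicates
        value = " ".join(meta.get(field, "").split()).lower()
        if value:
            groups[value].append(url)
    return {value: sorted(urls) for value, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export: URL -> title and meta description
pages = {
    "/shoes": {
        "title": "Running Shoes | Example Store",
        "description": "Shop running shoes.",
    },
    "/shoes?sort=price": {
        "title": "Running Shoes  | Example Store",
        "description": "Shop running shoes.",
    },
    "/boots": {
        "title": "Hiking Boots | Example Store",
        "description": "Shop hiking boots.",
    },
}

print(find_duplicates(pages, "title"))
print(find_duplicates(pages, "description"))
```

In this sample, the sorted variant of the shoes page repeats both the title and the description of the main page, which makes it a typical candidate for a rewrite or a canonical tag.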

It’s no wonder this matters: Google loves fresh and unique content.

Besides that, another option would be to apply the canonical tag to pages with duplicate content.

Note: A canonical tag (rel=canonical) shows search engines which URL is the original source of the content.
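To verify that a duplicate page really points to its original, you can read the canonical tag from its HTML. The following Python sketch uses only the standard library; the class and function names and the sample markup are hypothetical. It returns `None` when the tag is missing or when a page declares conflicting canonical URLs, since both cases deserve a manual look.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_url(html):
    """Return the single canonical URL, or None if it is missing or ambiguous."""
    finder = CanonicalFinder()
    finder.feed(html)
    unique = set(finder.canonicals)
    return unique.pop() if len(unique) == 1 else None


# Hypothetical markup of a duplicate page that points to the original
duplicate_page = (
    "<html><head><title>Running Shoes</title>"
    '<link rel="canonical" href="https://www.example.com/shoes">'
    "</head><body></body></html>"
)
print(canonical_url(duplicate_page))
print(canonical_url("<html><head></head><body></body></html>"))
```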

“That’s how you can improve and optimize your website content to resolve critical technical SEO issues by removing duplicate content, replacing missing information and images, creating a robustly structured website, highlighting your content, and making it visible to Google.”

16-Step SEO Audit: Inspection Points to Look Into!

- Step 1: Google Checks. Is your website following everything Google-related?

- Step 2: Benchmarks Checks. For setting all baselines. Understand where you’re starting from!

- Step 3: Site Architecture Checks. To ensure that visitors and Googlebot have all they need to navigate and crawl.

- Step 4: Technical Inspection Checks. To identify hidden issues that you might not even notice.

- Step 5: Competitor Analysis Checks. Are you clear about who you are up against?

- Step 6: Website Images Analysis Checks. Make sure your images are working for you, not against you.

- Step 7: Mobile Audit Checks. With mobile as popular as it is, make sure there are no problems.

- Step 8: Page Level & Element Checks. Best practice for your site and visitors.

- Step 9: User Experience (UX) Checks. A known ranking signal. Are you offering Good UX to keep your visitors happy?

- Step 10: Keyword Analysis Checks. Are you using the right keywords in the right places?

- Step 11: E.A.T Analysis Checks. EAT Analysis of known signals to help improve Expertise, Authority & Trust.

- Step 12: Backlink Audit Checks. A top-known ranking signal. Are you being held back by bad backlinks?

- Step 13: Local SEO Inspection Checks. Most websites rely on local traffic. Are you missing out on this?

- Step 14: International Checks. Do you cater to an international audience? Are all elements in place?

- Step 15: Negative Practices Checks. It is still easy to find yourself with negative practices on your site.

- Step 16: Content Analysis Checks. Is your content unique & amazing? If not, you could be setting yourself up for issues.

The SEO Audit Checklist’s Spreadsheet to Check Out 225 SEO Audit Inspection Points

The SEO Audit Checklist’s Spreadsheet Link: https://docs.google.com/spreadsheets/d/1pMW95zenRkx7Dra-qvnYApKzaMo4Z71HGLJ7hXDEw68/edit#gid=0

| Checks | Tools |

| GOOGLE CHECKS | (13 checks) |

| Is Google Analytics Installed? | gachecker.com |

| GA duplication checks | GA Debug Extension |

| Is Search Console set up? | GSC |

| Are there any Search Console errors? | GSC |

| Google cache analysis | ‘cache:’ in browser |

| Is there a sitemap.xml file? | Sitemap Check |

| Is there a discrepancy between indexed pages in Google and Sitemap? | Check Sitemap in GSC and site: in Google |

| Are there any invalid pages in the sitemap? | Screaming Frog |

| Are there any negative search results for the brand? | Google / Visual |

| Are there any negative Google Autosuggest? | Google / Visual |

| Is there a Google News sitemap.xml file? | GSC |

| Any manual actions? | GSC |

| Are there any crawl errors? | GSC |

| BENCHMARKS | (6 checks) |

| Total Pages Indexed in Google | Site: in Browser |

| Total Number of Backlinks | Ahrefs |

| Total Number of Linking Root Domains | Ahrefs |

| Total Number of Organic Keywords | GSC / Ahrefs / SEMRush |

| 20-50 Top Keyword Positions | GSC / Ahrefs / SEMRush |

| Domain Age | webconfs.com/web-tools/domain-age-tool/ |

| COMPETITOR ANALYSIS | (6 checks) |

| Top 3-5 competitors identified? | Client / SEMRush / Ahrefs |

| Has the competition been benchmarked? | Visual / Export |

| Competitor top 10 keywords | Visual / Export |

| Competitor average search positions | Visual / Export |

| Top ranking keywords | Visual / Export |

| Competitor Content Analysis | Ahrefs Content Explorer |

| SITE ARCHITECTURE | (23 checks) |

| Site protocol checks | Most Crawling Tools |

| Pagination checks | Screaming Frog |

| Canonical checks | Screaming Frog |

| Print version Noindexed? | Visual |

| Has Internal Linking been classified on primary pages? | Screaming Frog / SEMRush |

| Site Visualisation Checks | Screaming Frog / Sitebulb |

| Internal redirects | Screaming Frog |

| Redirect chains & Redirect loops | Screaming Frog |

| Robots.txt present? | Visual |

| Robots.txt review | Visual / SC |

| Are pages being correctly blocked by Robots.txt? | Visual |

| Are pages being correctly blocked by Meta Robots? | Screaming Frog |

| Site Structure & Silo Use | Screaming Frog / Sitebulb |

| Category Use (ecomm) | Visual |

| URL naming convention – is this well-optimized? | Most Crawling Tools |

| Error Pages | Screaming Frog |

| Is an HTML Sitemap in use? | Visual / Screaming Frog |

| Are Tag Pages being used? | Most Crawling Tools |

| Is the site using a crumb trail? | Visual |

| Is primary navigation easy to use? | Visual / UX |

| Footer navigation checks? | Visual |

| Is all good content under 4 clicks from home? | Most Crawling Tools |

| Menu setup and use | Visual |

| TECHNICAL INSPECTION | (32 checks) |

| Primary Protocol Use (HTTP / HTTPS)? | Most Crawling Tools |

| Does the site have a valid SSL certificate? | Chrome / sslchecker.com |

| Do all pages redirect from HTTP to HTTPS correctly? | Most Crawling Tools |

| Is an HSTS policy in place? | securityheaders.com |

| Does the site use Subdomains? | Most Crawling Tools |

| Does the site carry a Favicon? | Visual |

| Site Uptime | uptimerobot.com |

| Broken / Redirected Internal Links | Most Crawling Tools |

| Broken / Redirected External Links | Most Crawling Tools |

| Javascript Use | Screaming Frog / Visual |

| Is the .htaccess file configured correctly? | Visual |

| Are Dynamic Pages being served correctly? | Most Crawling Tools |

| Does the site have open dynamic pages that can be blocked? | From Crawl Data |

| Malware & Security Checks | sitecheck.sucuri.net |

| Blacklist check | mxtoolbox.com & ultratools.com/tools/spamDBLookup |

| Site Speed Checks | webpagetest.org / GTMetrix |

| Are any pages being duplicated due to poor architecture? | Most Crawling Tools |

| Structured Data & Schema Use | Google Testing Tool / SC |

| Are there any Chrome Console Errors? | Chrome Inspect |

| Is CSS being minified? | seositecheckup.com/tools/css-minification-test |

| Is Inline CSS being used? | Visual |

| Is every site page secure and without errors? | Screaming Frog |

| Are there any canonical errors? | Most Crawling Tools |

| Are all ads and affiliate links nofollowed? | Most Crawling Tools |

| Can the site be crawled and used without Javascript on? | Chrome Web Developer Extension |

| Server location by IP | iplocation.net |

| Check all sites on webserver | viewdns.info/reverseip/ |

| Do any pages have more than 100 external links? | Most Crawling Tools |

| What platform is the site built on? | builtwith.com |

| Does the platform come with known restrictions? | Research |

| Is a CDN in use? | builtwith.com |

| Check domain history | SEMRush / Wayback Machine |

| WEBSITE IMAGE OPTIMISATION | (7 checks) |

| How many images are used sitewide? | Most Crawling Tools |

| Are images being optimized? | Most Crawling Tools |

| Are ALT tags being regularly used? | Most Crawling Tools |

| Are images named sympathetically? | Most Crawling Tools |

| Are there any dead images? | Most Crawling Tools |

| Are too many stock images used? | Visual |

| Are there any images in excess of 100Kb? | Most Crawling Tools |

| MOBILE AUDIT CHECKS | (15 checks) |

| Responsive check | Google / responsinator.com |

| Popups/Interstitials | Visual |

| Mobile Page Size | webpagetest.org |

| Image use | Visual / webpagetest.org |

| Image optimization | webpagetest.org |

| Image resizing | webpagetest.org |

| Search console errors | GSC |

| AMP Check | Screaming Frog / SC / validator.ampproject.org |

| Mobile UX issues (see UX) | Visual / Hotjar / Yandex Metrica |

| Mobile Navigation | Visual |

| Use of video on mobile | Visual |

| Are buttons and links easy to click? | Visual / GSC |

| Is the Favicon being displayed in mobile SERPs? | Visual |

| Parity checks – Content, Meta & Directives the same as desktop? | Screaming Frog – 2 Crawls + Export |

| Mobile Testing | Mobile Moxie |

| PAGE LEVEL & ELEMENT ANALYSIS | (27 checks) |

| Are any Deprecated HTML Tags being used? | seositecheckup.com/tools/deprecated-html-tags-test |

| HTML Validation | validator.w3.org |

| Accessibility Checks | https://www.webaccessibility.com/ |

| CSS Checks | From Data |

| PAGE TITLES | |

| — Are all page titles under 65 characters? (570 pixels) | Most Crawling Tools |

| — Are any Page Titles under 30 characters? | Most Crawling Tools |

| — Are any page titles being duplicated without canonical/pagination? | Most Crawling Tools |

| — Any signs of keyword cannibalisation? | Most Crawling Tools |

| — Is the primary keyword/phrase close to the start? | Most Crawling Tools / Visual |

| — Are page titles descriptive of the page content? | Most Crawling Tools / Visual |

| — Are any page titles missing? | Most Crawling Tools |

| META DESCRIPTIONS | |

| — Are all Meta Descriptions unique and descriptive? | Most Crawling Tools |

| — Are any Meta Descriptions missing? | Most Crawling Tools |

| — Are any Meta Descriptions being duplicated without canonical/pagination? | Most Crawling Tools |

| — Are any Meta Descriptions below 70 characters? | Most Crawling Tools |

| Are Meta Keywords in use? | Most Crawling Tools |

| Are there any redirects other than 301? | Most Crawling Tools |

| Are there any 5xx errors? | Most Crawling Tools |

| Are image ALT tags in use? | Most Crawling Tools |

| Are there too many ads on any pages? | Visual |

| Does the site bombard you with popups? | Visual |

| Does the site carry a clear Call to Action? | Visual |

| Does each page have a clear H1 tag? | Most Crawling Tools |

| Are H2’s being used across the site? | Most Crawling Tools |

| Is the site W3C Compliant? | validator.w3.org |

| Does the site: brand show expected site links? | Google / Visual |

| Is the site using a Cookie Acceptance notice? | Visual |

| KEYWORD ANALYSIS | (10 checks) |

| Site keyword research for Benchmarks | Most Keyword Tools |

| Brand search – Does the homepage come up #1 when searched? | Google / Visual |

| Primary homepage term | Site |

| Is a keyword strategy in place? | Client / Research |

| Is there evidence of keyword duplication or overuse? | Most Crawling Tools |

| Are keywords in Page Titles? | Most Crawling Tools |

| Are keywords in H1? | Most Crawling Tools |

| Are keywords in H2? | Most Crawling Tools |

| Are keywords in Meta Description? | Most Crawling Tools |

| Are keywords in the main page document? | Most Crawling Tools |

| E.A.T ANALYSIS | (28 Checks) |

| MC (Main Content) Quality | Visual |

| Enough high-quality MC? | Visual |

| Positive reputation for website or content creator? | |

| Distracting ads/ supplementary content? | Visual |

| Do pages answer questions/search intent? | Visual |

| Site spelling & grammar check | Checkdog + others |

| Do any pages lack purpose? | Visual |

| Average internal links without 404 | Page Optimizer Pro |

| Average total internal links | Page Optimizer Pro |

| About Us page present? | Page Optimizer Pro |

| Twitter Card added? | Page Optimizer Pro |

| Are contact details complete? | Page Optimizer Pro |

| Is a Blog page present? | Page Optimizer Pro |

| Social Media links added? | Page Optimizer Pro |

| Customer Service page/Contact/FAQ/Help | Page Optimizer Pro |

| Twitter Description Tag present? | Page Optimizer Pro |

| Organization Schema added? | Page Optimizer Pro |

| Privacy Policy page and link? | Page Optimizer Pro |

| Clickable Email link? | Page Optimizer Pro |

| Person Schema present? | Page Optimizer Pro |

| Meet The Team page live? | Page Optimizer Pro |

| Terms of Service page? | Page Optimizer Pro |

| Link to Author page? | Page Optimizer Pro |

| More than one email on-site? | Page Optimizer Pro |

| Cookie Policy/GDPR page live? | Page Optimizer Pro |

| Trademark link in the footer? | Page Optimizer Pro |

| Are Authority Outbound links present? | Page Optimizer Pro |

| Meta Author tag used? | Page Optimizer Pro |

| CONTENT AUDIT | (16 checks) |

| Are all fonts large enough to read clearly? | Visual |

| Are hyperlinks clear? | Visual |

| Could font color be considered too light? | Visual |

| Are there clear primary and supplementary content types? | Visual |

| Is content Evergreen or Fresh? | Visual |

| Are there any thin pages? <200 words of content? | Most Crawling Tools |

| Does the site carry an up-to-date Privacy Policy? | Most Crawling Tools |

| Does the site carry up-to-date TOS’s? | Most Crawling Tools |

| Is there any duplicate content internally? | Most Crawling Tools |

| Is there any duplicate content externally? | Siteliner |

| Is any content scraped from external sources? | Siteliner |

| Is the contact page easy to find and use? | Visual |

| Content Gap Analysis | Ahrefs |

| Copy and classification checks | uclassify.com/browse |

| Has page grammar been checked? | Grammarly |

| Has page spelling been checked? | Checkdog / Grammarly |

| USER EXPERIENCE (UX) | (8 Checks) |

| Site video use | Visual |

| Homepage check | Visual |

| Internal page checks | Visual |

| Contact page checks | Visual |

| 404-page check | Visual |

| Category Pages (ecomm) | Visual |

| Mobile UX Priorities | Visual |

| Review live site usage | Hotjar / Yandex Metrica |

| BACKLINKS & ANALYSIS | (8 checks) |

| Backlink health & Score | Ahrefs |

| Spammy domains | Ahrefs |

| Has a disavow file been created? | GSC |

| Has the disavow file been checked? | Visual / Ahrefs |

| Anchor Text Use | Ahrefs |

| Backlinks lost | Ahrefs |

| Broken Backlinks | Ahrefs |

| Is there a large number of backlinks from one or more domains? | Ahrefs |

| INTERNATIONALISATION | (9 checks) |

| Does the site have an international audience? | Client / Research |

| Is the site using rel="alternate" hreflang="x"? | Most Crawling Tools |

| Is the site being translated without errors? | Visual |

| Is the site using an international URL structure? | Most Crawling Tools |

| Are the correct localized web pages being used? | flang.dejanseo.com.au / Research / Client |

| Does the site have backlinks from target countries? | Ahrefs |

| Is the site Multilingual, Multiregional, or both? | Client / Research |

| Does the site location need to be set up in Search Console? | GSC |

| Checks from international locations | Browseo & International IP’s |

| LOCAL SEO AUDIT | (9 checks) |

| Does the site need and gain traffic from local audiences? | Client / Research |

| Are local title tags being used? | Most Crawling Tools |

| Is there a consistent NAP across the site and external sites? | Visual / Ahrefs |

| Is local structured data being used? | Visual |

| Is there a Google My Business listing? | Google My Business |

| Is the site listed in reputable business directories? | Research / Ahrefs |

| Does the site have local citations? | Research / Ahrefs |

| Does the site carry good local content? | Visual |

| Does the site have too many thin-content local pages? | Most Crawling Tools |

| NEGATIVE PRACTICE | (8 checks) |

| Hidden text | Crawling Tools & Research |

| Cloaking | duplichecker.com/cloaking-checker.php |

| Doorway pages | Most Crawling Tools |

| Meta Refresh | Screaming Frog |

| Javascript redirection | Screaming Frog |

| Link Exchanges | Ahrefs / Research |

| Is Flash being used? | Screaming Frog |

| Are iFrames in use? | Screaming Frog |

Bottom Line

That’s all! Hopefully, this technical SEO checklist, which covers the nuts and bolts of a technical site audit from theory to practice, has helped you understand what technical files exist, why SEO issues come up, and how to fix and avoid them in the future. Also, now that you know some SEO audit tools, both popular and little-known, you’ll be able to conduct your website’s technical SEO audits without any hassle.

FAQs

Q. What to Do Before Starting an SEO Audit?

Ans: In website inspection, two critical keys are preparation and knowing the right questions to ask. So, consider the following—

- What is your website history?

- Has the work already been completed?

- Has any new strategy been implemented?

- Do you have time to do the audit?

- Does your website have at least 30-50 keywords to track?

Q. What Should You Ideally Cover in Your SEO Audit?

Ans: Here’s a list of what you should consider covering in your SEO audit—

- Figure out how SEO fits into your overall marketing strategy

- Crawl your website and fix technical errors

- Use robots.txt and robots meta tags to resolve technical issues

- Check your page speed and load time

- Test your website’s mobile-friendliness

- Remove low-quality content from your website

- Get rid of structured data errors

- Reformat URLs and set up 301 redirects

- Test and rewrite your title tags and meta descriptions

- Analyze keywords and organic traffic

- Learn from your competition

- Improve content with on-page SEO audits

- Improve your backlink strategy

- Analyze and optimize your existing internal links

- Track your site’s audit results

Q. What are the tools needed to run a technical audit?

Ans: The following is a list of tools (both free and paid) needed to run a proper technical audit:

| Free Tools to Run a Technical Audit | Paid Tools to Run a Technical Audit |

| Screaming Frog SEO Spider | Majestic SEO |

| Google Analytics | SEMrush |

| Google Search Console | Ahrefs |

| Google PageSpeed Insights | DeepCrawl |

| HTML sitemap generator | |

| XML sitemap generator | |

| No Follow browser plugin | |